Increase Service Bus Session Processor Throughput

My application was processing Azure Service Bus messages at a really slow pace, in a scenario where almost every message carries a unique session ID.

The message session mechanism is used to achieve FIFO message delivery. In my case, the session ID represents a customer ID. It’s useful to process customer-related messages in order, but in most cases, same-customer messages are far apart.

I realized how bad the situation was when producers started failing, because the topic’s max size (5GB) was hit.

The code below is C#, but the Service Bus SDK is similar in other ecosystems like Java. For DI purposes, your ServiceBusClient and ServiceBusSessionProcessor should be registered as singletons. If you’re dealing with more than one topic/subscription, you can register multiple keyed singletons with AddKeyedSingleton.

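    using Azure.Messaging.ServiceBus;

    var client = new ServiceBusClient("my-connection-string");

    var opts = new ServiceBusSessionProcessorOptions
    {
        ReceiveMode = ServiceBusReceiveMode.PeekLock,
        // Messages must be completed explicitly in the message handler.
        AutoCompleteMessages = false,
        // Upper bound on sessions this processor instance handles at once.
        MaxConcurrentSessions = 20,
    };

    var sessionProcessor = client.CreateSessionProcessor("my-topic", "my-subscription", opts);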
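
For the DI registration mentioned earlier, here is a minimal sketch, assuming .NET 8 or later (keyed services live in Microsoft.Extensions.DependencyInjection 8.0+). The "orders" key and the topic/subscription names are hypothetical placeholders, not something from my actual setup.

```csharp
using Azure.Messaging.ServiceBus;
using Microsoft.Extensions.DependencyInjection;

var services = new ServiceCollection();

// One client per namespace, shared by every processor.
services.AddSingleton(_ => new ServiceBusClient("my-connection-string"));

// One keyed processor per topic/subscription pair.
// "orders", "orders-topic" and "orders-subscription" are hypothetical names.
services.AddKeyedSingleton<ServiceBusSessionProcessor>("orders", (sp, _) =>
    sp.GetRequiredService<ServiceBusClient>().CreateSessionProcessor(
        "orders-topic",
        "orders-subscription",
        new ServiceBusSessionProcessorOptions
        {
            ReceiveMode = ServiceBusReceiveMode.PeekLock,
            AutoCompleteMessages = false,
            MaxConcurrentSessions = 20,
        }));

await using var provider = services.BuildServiceProvider();

// Resolve a specific processor by its key.
var ordersProcessor = provider.GetRequiredKeyedService<ServiceBusSessionProcessor>("orders");
```

Additional topic/subscription pairs would each get their own AddKeyedSingleton call with a different key.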

At first, I didn’t give much thought to MaxConcurrentSessions = 20. It was just a number I came up with after reading the official sample code.

The application in question is running in multiple pods in Kubernetes. Since the work involved in message processing is lightweight, I figured processing 20 messages at roughly the same time, per pod, would be enough.

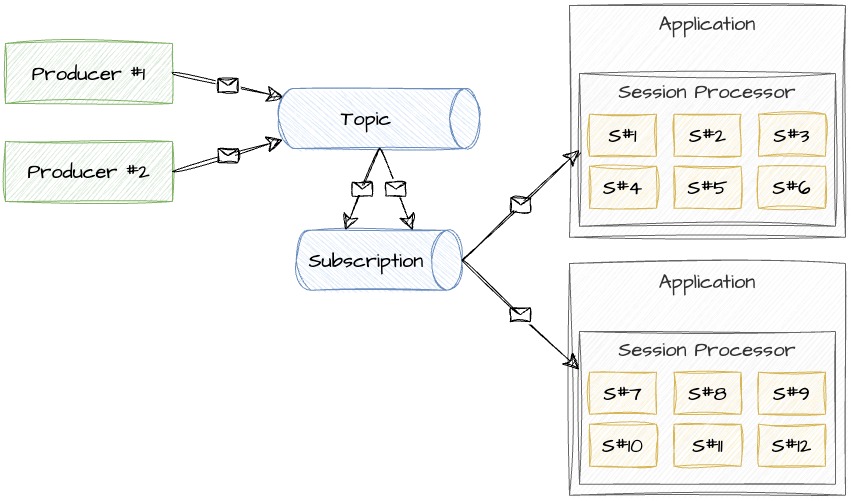

To keep the example small, let’s reduce MaxConcurrentSessions to 6. The diagram shows exactly that case.

Let’s say producers push 36 messages, each with a unique session ID. Suppose it takes 200ms to process a single message. With this setup, you’re gonna see each application instance process 6 messages, almost instantly. This translates to 6 different open sessions per instance.

But then… it pauses. It doesn’t process the remaining messages for a while. Is it stuck? Is there a bug in the SDK? Is it a network issue? Hard to tell.

After a while, each instance processes 6 more messages, and then it pauses again. Looks like a pattern. But it’s way more difficult to figure out why these hiccups happen in a busy production environment with more instances, as they are booted up at slightly different times, so processing starts and pauses at different times.

It’s one of those cases where you’re offered a shiny, rather high-level SDK, that you’re only supposed to configure correctly. But configuration varies wildly based on the throughput you require. And you can only give a guesstimate for that throughput before your app hits production.

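It turns out that a session, once open (like after receiving a message), will stay open for a set amount of time, waiting for the next message in that same session. While it waits, it keeps occupying one of the MaxConcurrentSessions slots, so the processor can’t move on to a new session. The option controlling this is called SessionIdleTimeout. When you don’t set it, the processor falls back to the retry options’ TryTimeout, which is 1 minute by default. This is exactly the pause from before. (A session can also be lost when a message takes too long to process, but that is a matter of session lock renewal, not of the idle timeout.)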
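
A minimal sketch of setting the option explicitly, reusing the options object from earlier. The 30-second and 100-session values are only illustrative, not a recommendation.

```csharp
using System;
using Azure.Messaging.ServiceBus;

var opts = new ServiceBusSessionProcessorOptions
{
    ReceiveMode = ServiceBusReceiveMode.PeekLock,
    AutoCompleteMessages = false,
    // Illustrative value: more slots per instance.
    MaxConcurrentSessions = 100,
    // Illustrative value: how long an open session waits for its next
    // message before the processor closes it and frees the slot.
    SessionIdleTimeout = TimeSpan.FromSeconds(30),
};
```

A shorter timeout frees slots sooner, at the cost of closing and reopening sessions more often when same-session messages do arrive close together.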

Given a situation where most of your messages have unique session IDs, and processing time is negligible compared to the idle timeout, throughput can be estimated like this:

number_of_application_instances * max_concurrent_sessions / session_idle_timeout

e.g., 4 instances with MaxConcurrentSessions = 100 and SessionIdleTimeout = TimeSpan.FromSeconds(30) give you 4 * 100 / 0.5 minutes, so roughly 800 messages per minute.
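
The same estimate as a small helper, in case you want to plug in your own numbers. EstimateMessagesPerMinute is a hypothetical name of mine, not part of the SDK, and it carries the same assumptions as the formula: unique session IDs and negligible processing time.

```csharp
using System;

// instances * max_concurrent_sessions / session_idle_timeout, in messages per minute.
// Hypothetical helper for back-of-the-envelope sizing, not an SDK API.
static double EstimateMessagesPerMinute(
    int instances, int maxConcurrentSessions, TimeSpan sessionIdleTimeout)
{
    if (sessionIdleTimeout <= TimeSpan.Zero)
    {
        throw new ArgumentOutOfRangeException(nameof(sessionIdleTimeout));
    }

    return instances * maxConcurrentSessions / sessionIdleTimeout.TotalMinutes;
}

// The example from the text: 4 instances, 100 sessions, 30 s idle timeout.
Console.WriteLine(EstimateMessagesPerMinute(4, 100, TimeSpan.FromSeconds(30))); // 800

// The diagram scenario for a single instance: 6 sessions, default 1 minute timeout.
Console.WriteLine(EstimateMessagesPerMinute(1, 6, TimeSpan.FromMinutes(1))); // 6
```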

Adjust as needed.

Read more: